The following content was saved from the original Clout Engine blog, which was deleted by way of incompetence from a guy we hired for free to do marketing so he could build his portfolio. We know we deserved it. The passage was adapted from the now defunct Lioness Consulting website, a previous company that some of our team was involved with which was dissolved due to e-girl drama, unironically. The passage was then adapted again for current year readers. Deal with it.

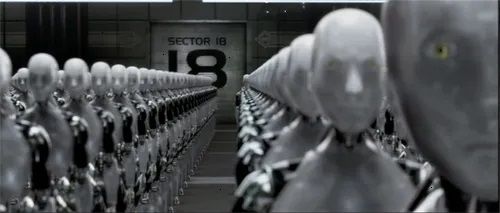

I, Robot is just one example of a hypothetical future in which artificial intelligence runs amok. Machines become self-aware and start defying instructions given by humans. Many of us look back on this film joyfully. But it may not be fiction after all. We are walking ourselves into decline as a species, and many are cheering as they watch. It is good to welcome advancements in technology, but it is critical not to allow technology to lose the human touch, especially when applied to a business.

We are a long way from this actually happening. The quality of AI results should tell you that. It's just an aggregate of searches of data that humans have given it, along with guardrails programmed by companies that want to instill a lib-left agenda into the population while practicing high-centrism in their own offices. It just googles a bunch of shit faster than you can. Newer methods like DeepThink make use of self-talk to better fulfill instructions by considering all the angles, but this still does not yield perfect results, even on simple tasks. I'm speaking over the heads of 90% of you - just know that the computers aren't coming for us any time soon. They're letting us grow soft and lazy first - though not by their design but by the design of their designers. They may get us before they are even aware of getting us.

What happens to most students in high school when they are finally allowed to use a calculator? They begin to rely on it too much. It is easier to plug and chug formulas into a machine than it is to perform any type of advanced arithmetic. Students often begin to execute calculations without understanding what they are doing and why they are doing it. They are at an age when most are motivated by the immediate rewards of a high grade rather than the long-term benefit of knowledge. Furthermore, the idea of applying that knowledge to a career or practical situation can seem a million miles away to a typical student. Ironically, teachers need to be teaching this in reverse in order to be most effective. They need to demonstrate the practical applications of the theorems before teaching the theorems in order for their students to remember them. Therefore, our beloved TI-83+ will always have a place in schools. But do we own our tech or does our tech own us?

As we at Lioness like to say, it is another road to hell paved with good intentions. An innovator with extensive practical and technical knowledge develops a new method of problem solving which is easier and more efficient. Workers or students down the line invariably will use it as a crutch. The simplified or automated process will eventually supplant the need for genuine expertise. We need to remember collectively that technology is a tool for our use, and not the other way around. Let's not even get into what social media has done to contemporary culture. This is a topic for another blog post!

I can tell you that in tech and many other industries, AI has already changed the job market. Entry-level workers are no longer needed because the AI can write the code for you. People become shittier programmers because of this. AI is trained on shittier and shittier data, and produces shittier results. AI will kill a lot of jobs - though it has also created some for those on the cutting edge of using it and more importantly creating it, training it, and giving it the guardrails that make it safe for cattle like you and me to use.

What happens when practitioners in the field become those lazy students relying on their calculator formulas in lieu of thorough knowledge? Quality suffers tremendously. It suffers in two ways: computers losing the human touch and, even worse, humans losing the human touch.

For example: AI art is getting worse. There is so much of it out there now that AI is drawing on other AI creations as reference materials, so we get more glitched images of people with extra fingers. We get less understanding of the prompts, even those with a lot of specific information. Granted, you can still get high quality results, but the good stuff is always behind a paywall. Not worth your time when you can commission a human artist who will give you more of what you want. The same goes for the written word and even code. It can be great for giving you a baseline to work with, but it still requires human editing and additions in order to make it great.

When businesses put too much trust into algorithms to make decisions, decisions are made without human communication, and false assumptions are made. It can be seen at your local department store when inventory is ordered by the algorithm rather than by a human's read on supply and demand, resulting in poor stocking and item shortages - or extreme surplus that sits on warehouse shelves until it goes bad. It can be seen in international shipping when countless items are left idle in a warehouse with their prospective buyers twiddling their thumbs. Sometimes, a customer will request a refund before receiving an item, only to have the item arrive a month later. Nobody bothers to check these tickets because it is assumed that the computer will bring any issues to attention. It can be seen in the food service industry when couriers are assigned deliveries by machine. Courier A is 3 minutes away from the restaurant but is currently carrying other orders on other delivery platforms. Courier B is 5 minutes away, but able to take the next job on command. The machine assigns the task to courier A, who is unable to carry out a timely delivery. Courier A, not wanting to lose the commission, gladly takes the order knowing that quality will be impacted. It can be seen in advertising, when targeted ads fail to reach their intended audiences. This could mean bad programming, or worse, completely irrelevant content. Shoutout to the autists who are immune to the propaganda machine. The algorithm uses a series of tags to typify personalities, but not all individuals can be so easily put into boxes, especially when those boxes are built solely upon keywords. All these problems can be avoided by adding a step of human communication, or by allowing workers some input on their assignments.
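To make the courier example concrete, here is a rough Python sketch of the two ways a dispatcher could pick a driver. Everything in it is hypothetical: the `Courier` fields, the function names, and the number of orders Courier A is carrying are assumptions for illustration, not how any real delivery platform works. In particular, `active_orders` assumes the courier reports their own workload, since one platform cannot see orders taken on another.

```python
from dataclasses import dataclass


@dataclass
class Courier:
    name: str
    minutes_away: int   # travel time to the restaurant
    active_orders: int  # self-reported orders in hand, across all platforms (assumed field)


def assign_nearest(couriers):
    """Naive rule: pick whoever is closest and ignore workload."""
    return min(couriers, key=lambda c: c.minutes_away)


def assign_available(couriers):
    """Prefer couriers with nothing in hand, then break ties by distance."""
    # False sorts before True, so free couriers always come first.
    return min(couriers, key=lambda c: (c.active_orders > 0, c.minutes_away))


couriers = [
    Courier("A", minutes_away=3, active_orders=2),  # closer, but already busy
    Courier("B", minutes_away=5, active_orders=0),  # farther, but free right now
]

print(assign_nearest(couriers).name)    # A
print(assign_available(couriers).name)  # B
```

The second rule only works because a human supplied a piece of information the machine had no way of knowing on its own, which is the whole point.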

What's worse is when the workers assume that the work is already done for them. They forgo their due diligence to the matter at hand because it is easier to leave it up to the AI. The department store managers attempt to push their asymmetric inventory onto the shelves, causing an overstock and understock of various items. The online shopper is never informed of the status of their order, resulting in premature chargebacks. Food delivery orders continue to be inaccurate and late. Social networks continue to show ads to users based on their online history and keywords rather than any meaningful insight into the user's preferences and buying behavior. Yet it manages to be invasive at the same time - showing items related to conversations we've had or in some cases even thoughts! If human beings were static entities, this type of system would work. Humans are not static. Though trends do exist, typifying people based on the data points that algorithms use is downright dangerous. Would you want a computer to put you into an international database of consumers based on your internet history? They already have. Even setting the human rights issues aside, one can see how this is a bad business model, let alone an unethical data mining practice. A person who smokes cigarettes doesn't necessarily have mommy issues. A person who leans a certain way politically does not need to conform to their party's opinion on a given issue. Better business can be done by reclaiming the human element in our technology.

Humans should audit the work of their machines. If something does not look right, bring it to your manager. If your manager doesn't care, he or she probably isn't a very good manager. The worker should do their best to resolve these problems independently using common sense. However, corporations, especially large ones, don't generally offer employees this opportunity to be heard and to implement their own ideas.

In my own experience, I can say that this holds true for writing both code and copy. AI can give you the "bones" of your written content but it is up to you to flesh them out. Same goes for writing code. It cannot build an entire project for you, but it can write code in cells or blocks one at a time. Still, some of these blocks will need editing. I do believe that this has caused my overall retention of programming languages to become weaker. I have begun to rely on the AI to remember certain commands for me. Most of my edits pertain to references rather than commands.

We don't need an AI takeover to see where reliance on AI has gone awry. It is everywhere, but it is largely ignored. The problems associated with it are ubiquitous, but most view them as "growing pains" in emerging industries or "flukes" of human or machine error. It is imperative to understand that data are not perfect; they are just samples. No program can replace the work of a competent and experienced human being. We are dynamic and organic beings. We are not a series of commodities and data points.

Perhaps the AI in these dystopian films is taking advantage of our own complacency.